$900M error at Citi in a process with 6-eye checks shows that more controls do not solve all problems

A ‘maker’ sets up a payment, a ‘checker’ checks it and an ‘approver’ approves it, yet all three let the same error slip through. As a result, creditors of a defaulting company, to their delight, got their money back. The recipients refused to return it, and the judge has decided in their favour.

Those who haven’t worked in financial services operations wonder how this can happen. Those who have remember all the times they escaped by the skin of their teeth!

At the heart of this lies the human propensity to make similar mistakes. Let me explain with a simple example from personal experience. A trade instruction for EUR 7,850,649 was once faxed as EUR7,850,649.

Spot the difference! The maker and the checker, both used to seeing a space after the currency code, processed it as EUR 850,649: with the space after ‘EUR’ missing, both glanced over ‘EUR7’ as if it were the currency code.
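A program that assumes a fixed layout can fall into exactly the same trap. The Python sketch below is illustrative only, and both helpers (`parse_fixed_offset`, `parse_amount`) are hypothetical names, not code from any real system. The first mimics the habit of expecting a space right after the three-letter currency code, so it silently skips whatever character sits there; the second uses a regular expression in which the space is optional and the amount must be a plain or properly comma-grouped number.

```python
import re
from decimal import Decimal


def parse_fixed_offset(text: str) -> tuple[str, Decimal]:
    """Mimics the habit: currency = first 3 characters, amount starts after
    the space that is *expected* at index 3 (whatever is there is skipped)."""
    currency = text[:3]
    amount = text[4:].replace(",", "")
    return currency, Decimal(amount)


# Three-letter currency code, optional whitespace, then a plain or
# comma-grouped amount with optional decimals.
AMOUNT_RE = re.compile(
    r"^([A-Z]{3})\s*((?:[0-9]{1,3}(?:,[0-9]{3})*|[0-9]+)(?:\.[0-9]+)?)$"
)


def parse_amount(text: str) -> tuple[str, Decimal]:
    """Parses 'EUR 7,850,649' and 'EUR7,850,649' the same way; rejects anything else."""
    m = AMOUNT_RE.match(text.strip())
    if m is None:
        raise ValueError(f"unrecognised amount format: {text!r}")
    currency, amount = m.groups()
    return currency, Decimal(amount.replace(",", ""))


# Compare the two readers and flag any disagreement.
for raw in ["EUR 7,850,649", "EUR7,850,649"]:
    naive, robust = parse_fixed_offset(raw), parse_amount(raw)
    flag = "OK" if naive == robust else "MISMATCH"
    print(f"{raw!r}: fixed-offset={naive[1]} regex={robust[1]} {flag}")
```

With the well-formed instruction both readers agree; with `EUR7,850,649` the fixed-offset reader returns 850,649, reproducing the human error, while the regex reader still returns 7,850,649. Running two differently built readers and flagging any disagreement is one simple way to catch such slips, though a production parser would also need to handle other amount conventions.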

In capital markets, institutions deal with each other regularly, so the error was easier to rectify. Still, there was a loss due to forex fluctuation. Citi has not been so lucky. The Citi case, which involves its role as administrative agent on a loan to a defaulting company, is more complex, but the underlying reason is the same: it is rare for two or three people to make the same mistake, but not impossible, because humans make similar errors.

Around the time I had to deal with this error, I read about Prof. Regina Barzilay’s work at MIT. Hers is a fascinating story, and her research in healthcare is pathbreaking. If software can read and understand radiology reports, I wondered, surely it can read trade instructions! There was no reason we should still suffer losses from human error in such transactions. We started a collaboration with her lab and, although I moved on soon after to start my own venture in blockchain-based structured finance, the idea stayed with me.

In some ways, this idea bore fruit when we set up IN-D.ai in 2019. One of the first use cases we addressed was process controls. Recently, Ramesh Gopalan, Head of Risk and Controls for Financial Market Operations at Standard Chartered Bank, published a white paper on how the bank combined a cognitive component (from IN-D.ai) with a process orchestration solution to automate the controls testing process, increasing the breadth and depth of operational risk audits.

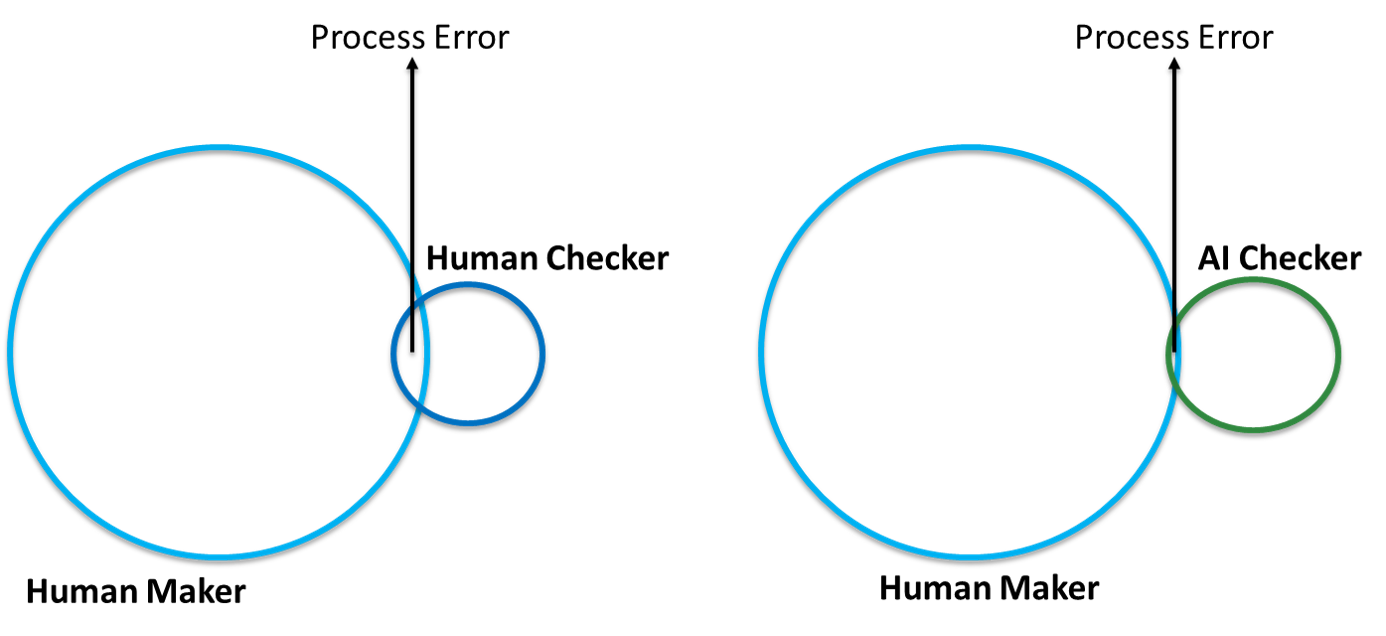

It is not that AI software never makes mistakes, but the likelihood of it making the same mistake as a human is very low. In other words, if you drew a Venn diagram of the errors made by the human and by the AI software, there would be almost nothing in the intersection.

Pic 2: It isn’t that AI software will not make a mistake, but it is unlikely to make the same mistake as a human being
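The Venn-diagram argument can be put into numbers with a deliberately simple probability model. This is a sketch under stated assumptions, not a measured result, and every input below is hypothetical: each human reviewer misses a given error with probability `p`; a share `beta` of errors are common blind spots (like the missing space) that all humans miss together, while the rest are missed independently; and the AI check misses with probability `q`, independently of the humans, which is the near-empty intersection described above. The notion of common-cause failures is loosely borrowed from reliability engineering; the function name `miss_probability` is illustrative.

```python
from typing import Optional


def miss_probability(p: float, n_humans: int, beta: float,
                     q: Optional[float] = None) -> float:
    """Probability that an error slips past every reviewer in the chain.

    p        -- chance that one human reviewer misses the error
    n_humans -- number of human reviewers (maker, checker, approver, ...)
    beta     -- share of errors that are common blind spots: with probability
                beta the humans miss together (jointly, with probability p);
                otherwise each misses independently with probability p
    q        -- chance that an AI check misses the error, assumed independent
                of the humans; None means there is no AI check
    """
    humans_miss = beta * p + (1 - beta) * p ** n_humans
    return humans_miss if q is None else humans_miss * q


# Hypothetical inputs, chosen only to illustrate the shape of the result.
p, beta, q = 0.01, 0.5, 0.05
scenarios = {
    "maker + checker (4 eyes)": miss_probability(p, 2, beta),
    "maker + checker + approver (6 eyes)": miss_probability(p, 3, beta),
    "maker + checker + AI": miss_probability(p, 2, beta, q),
}
for name, prob in scenarios.items():
    print(f"{name:38s} {prob:.7f}")
```

With these made-up inputs, the third human reviewer barely helps (about 0.00505 with two humans versus about 0.0050005 with three), because the shared blind spot dominates; replacing the approver with an independent AI check brings the rate down to about 0.00025. The conclusion rests entirely on the independence assumption for `q`, which would need to be validated on real data.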

Using AI allows us to automate not only controls testing but the control itself. There will be cost savings too, but that is a by-product; what matters is that it lets us eliminate certain types of risk. This also fits well with the three lines of defense (TLOD) risk management framework.

Some examples of processes where this can work well are:

In India, where an agent is mandatory on a Video KYC call, we have added IN-D’s AI solution to perform the same check in parallel. For payment processing, AI can either do the automation (generate the SWIFT message from a payment instruction) or provide the control (match the payment instruction against a SWIFT message created manually).
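The payment control mentioned here, matching a payment instruction against a manually created SWIFT message, can be sketched as a field-by-field comparison. This is a minimal illustration under loud assumptions: the message is a simplified MT103-style text in which field `:32A:` holds the value date (YYMMDD), the currency code and the amount (with a comma as decimal mark); the instruction has already been read into structured form (the AI reading step is not shown); and `PaymentInstruction`, `parse_32a` and `check_payment` are hypothetical names. A real control would use a proper SWIFT parser and compare many more fields, such as beneficiary and account details.

```python
import re
from dataclasses import dataclass
from datetime import date
from decimal import Decimal


@dataclass
class PaymentInstruction:
    """Hypothetical structured result of reading a payment instruction."""
    value_date: date
    currency: str
    amount: Decimal


# Simplified :32A: field: 6-digit date (YYMMDD), 3-letter currency,
# amount with a comma as the decimal mark (e.g. 7850649,00).
FIELD_32A = re.compile(r"^:32A:(\d{6})([A-Z]{3})(\d+,\d*)\r?$", re.MULTILINE)


def parse_32a(mt_text: str) -> tuple[date, str, Decimal]:
    m = FIELD_32A.search(mt_text)
    if m is None:
        raise ValueError("field :32A: not found")
    yymmdd, currency, amount = m.groups()
    # Assumption for this sketch: two-digit years are in the 2000s.
    value_date = date(2000 + int(yymmdd[:2]), int(yymmdd[2:4]), int(yymmdd[4:]))
    return value_date, currency, Decimal(amount.replace(",", "."))


def check_payment(instr: PaymentInstruction, mt_text: str) -> list[str]:
    """Return the mismatches found; an empty list means the control passes."""
    value_date, currency, amount = parse_32a(mt_text)
    issues = []
    if value_date != instr.value_date:
        issues.append(f"value date: message {value_date}, instruction {instr.value_date}")
    if currency != instr.currency:
        issues.append(f"currency: message {currency}, instruction {instr.currency}")
    if amount != instr.amount:
        issues.append(f"amount: message {amount}, instruction {instr.amount}")
    return issues


# Hypothetical example: the message was keyed with the '7' dropped.
instruction = PaymentInstruction(date(2021, 8, 11), "EUR", Decimal("7850649"))
message = ":20:REF12345\n:23B:CRED\n:32A:210811EUR850649,00\n:71A:SHA"
print(check_payment(instruction, message))
```

Here the check reports a single amount mismatch, the same kind of discrepancy the maker and checker missed in the EUR 7,850,649 example.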

The question now is where to start with AI in managing operational risk:

1. Automate Controls Testing: increase the scope and scale of testing at a fraction of the cost. (In terms of TLOD, this uses AI in the 2nd and 3rd lines, while the operational controls remain manual.)

2. Automate One Level of Check in a 6-Eye Check Process: use AI to step in for one of the three people involved. (In terms of TLOD, this brings AI into the first line, replacing a human control with an AI-enabled one.)

3. Enhance Controls: use AI in a process without a checker, or in one with a checker (4-eye check) where you have considered an additional control but not implemented it because of cost. (In terms of TLOD, this also brings AI into the first line, but instead of substituting a control it adds one at a small incremental cost.)
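The first option’s claim about scope and scale comes down to sampling arithmetic. As a hypothetical illustration: if manual controls testing reviews a random sample of `k` out of `N` transactions, and `m` of them contain a control exception, the chance that the sample includes at least one exception follows the hypergeometric distribution, 1 - C(N - m, k) / C(N, k). An automated test run over the full population examines every transaction, so coverage is no longer the limit (the test’s own accuracy still is). The function name and all numbers are assumptions for illustration.

```python
from math import comb


def detection_probability(population: int, exceptions: int, sample: int) -> float:
    """Chance that a random sample drawn without replacement from `population`
    items contains at least one of the `exceptions` (hypergeometric)."""
    sample = min(sample, population)
    # 1 minus the probability that every sampled item is clean.
    return 1 - comb(population - exceptions, sample) / comb(population, sample)


# Hypothetical: 10,000 payments, 5 with an exception, 25 sampled for testing.
print(f"sample of 25:    {detection_probability(10_000, 5, 25):.2%}")
print(f"full population: {detection_probability(10_000, 5, 10_000):.2%}")
```

With these made-up numbers, a 25-item sample has only a little over a 1% chance of surfacing any of the five exceptions, whereas testing the full population examines all of them.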

This approach enhances risk mitigation, with cost savings as an add-on benefit. What AI and humans can’t do individually for risk mitigation, they can do better together!

1. Some details have been changed to protect the confidentiality of actual client operations.